AI has transformed many areas, including healthcare, finance, and content creation. Language models like GPT-4 can write text, and other generative tools can produce images and music, which makes them key assets in the creative world. As AI-made content grows more common, we face a tricky and crucial question: should we treat content written by AI as the property of the person who prompted it? This issue touches on law, ethics, technology, and creativity, and it has significant consequences for creators, users, and society.

How AI Helps Create Content

To grasp the problem, we need to understand how AI helps create content. AI systems like GPT-4 are trained on huge data sets to learn patterns in language, and they then generate human-sounding text in response to a prompt. These tools can produce all kinds of writing, from quick blog posts to in-depth reports, often with little human help. The person operating the AI supplies a starting point or some direction, but the AI does most of the creative work.

The Case for AI-Generated Content as Property

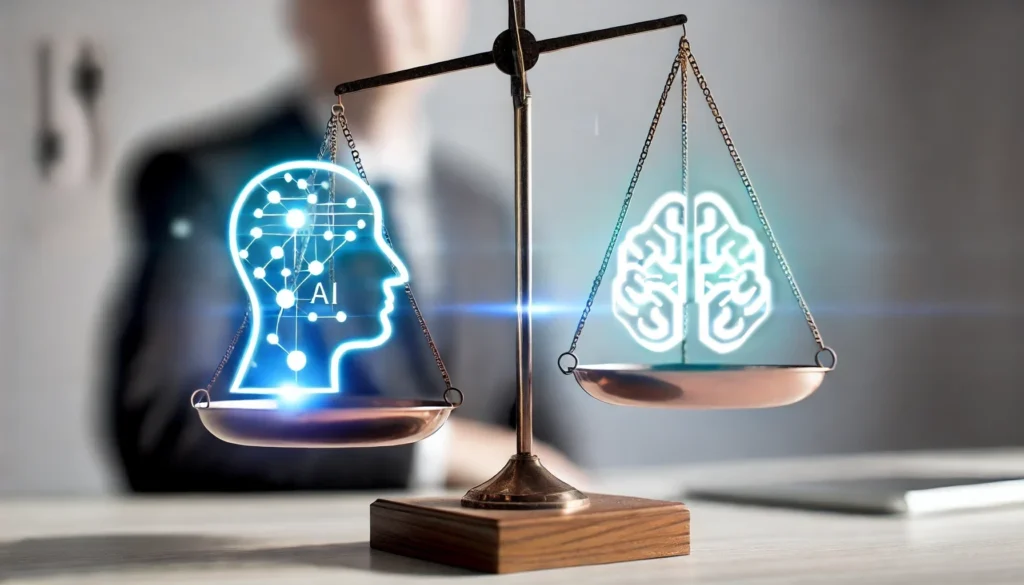

One argument for treating AI-generated content as the property of the person who prompts it stems from the idea of ownership through effort. Although the AI does most of the work, the user provides the initial input, sets the rules, and decides how the content is used. From this angle, the user plays a key part in the creative process, much like a director guiding actors in a movie. So, you could argue that the content should belong to the user, since they told the AI what to make.

What’s more, viewing AI-generated content as the user’s property could fit within current intellectual property (IP) law, especially copyright. In many jurisdictions, copyright protects original creative works. AI-generated content might not meet the usual standards of originality on its own, but if the user’s input and guidance are substantial enough, the final content could be seen as a joint effort between the person and the AI. That view would give the user ownership rights.

The Case Against AI-Generated Content as Property

On the flip side, there are strong reasons to think AI-generated content shouldn’t belong to the person who prompted it. One major concern is that AI output isn’t truly creative or original. Critics argue that systems like GPT-4 can’t think for themselves or come up with new ideas; they generate text by reproducing statistical patterns learned from their training data. Because, on this view, AI can’t produce genuinely new work, its output shouldn’t be anyone’s intellectual property, not even the property of the person who supplied the prompt.

Another concern is the potential for misuse and exploitation. If users own AI-made content, people or businesses could churn out large volumes of content with little effort and then claim it as their own. This could flood the market with low-quality material, devalue the work of human creators, and make intellectual property much harder to protect. There are also ethical questions about using AI in creative fields if it displaces human workers.

The Middle Ground: A Team-Up Approach

Considering how tricky this problem is, finding a middle ground might be the best way forward. Instead of seeing AI-created content as owned by the user or not owned at all, we could think of it as a team effort between the person and the AI. In this setup, the user would have some rights to the content, like being able to use it, change it, and share it. But these rights wouldn’t be as broad as traditional IP rights.

For example, the user might have to credit the AI’s role in the creative process, just as a co-writer is credited on a joint project. There might also be limits on selling AI-made content in fields that prize human creativity and originality. This approach would balance the rights and concerns of users, creators, and the public at large, while also addressing some of the ethical and practical issues tied to AI-generated content.

Legal and Ethical Considerations

The legal landscape for AI-made content is still changing, and there is no consensus on how to handle it. Several jurisdictions have started to address the issue, each in its own way. Take the U.S. Copyright Office, for instance. It has taken the position that copyright requires human authorship, so material generated entirely by AI can’t be registered, although human contributions such as selecting, arranging, or modifying AI output may still be protected. Meanwhile, other countries are considering new laws to deal with the problems that arise when AI creates content.

From an ethical point of view, we need to consider how AI-generated content affects creativity, jobs, and access to information. AI could make content creation easier and cheaper for everyone, but it also raises concerns that human creativity will lose value and that the technology will be misused. As AI keeps improving, lawmakers, creators, and the public will need to keep discussing these ethical issues and develop rules that keep things fair, transparent, and accountable.

To wrap up

Whether AI-written content belongs to the person who prompted it isn’t a simple issue. It’s a complex question with many sides and no clear-cut answers. While both views make good points, the fairest approach might be to treat AI-generated content as teamwork between the human and the AI. This approach would let users keep some rights to the content while recognizing that AI-generated work is distinct from purely human work. It would also help address potential ethical and practical issues.

As AI keeps changing the creative industries, we need to come up with new legal frameworks and ethical guidelines that match how content creation is evolving. This way, we can make sure AI is used to help society while also looking out for the rights and interests of human creators.